This article is the part of the series related to mastering DP-500 certification exam: Designing and Implementing Enterprise-Scale Analytics Solutions Using Microsoft Azure and Microsoft Power BI

Table of contents

Your company is planning to adopt (or already adopted) Azure Synapse Analytics, as a one-stop-shop for all the analytic workloads, and now you’re wondering how to leverage certain parts of this robust platform to best serve your analytic needs? Keep reading and I promise that by the end of this article you’ll have a clear picture, in which business scenarios to choose one (or more) of Synapse’s pools.

10.000 Feet high overview of the Synapse Pools

In one of my previous articles, I’ve already explained the Synapse architecture from a high-level perspective. The core part of the platform are two SQL-based pools: Dedicated and Serverless SQL pools, while there is also a Spark pool for developers familiar with this open source engine mostly used for data engineering and machine learning tasks.

In the meantime, Synapse got a new “analytic buddy” in Azure Synapse Data Explorer (which is still in preview at this moment), that enables you to analyze log and telemetry data at scale.

In this series of articles published previously, I’ve already explained the technical aspects of both Dedicated and Serverless SQL pools. Not just that, I’ve also examined how each of these pools can be used in synergy with Power BI to enable a seamless reporting experience over large amounts of data. So, I strongly encourage you to walk through these articles if you want to understand how Synapse SQL pools work behind the scenes, especially in conjunction with Power BI.

Finally, in this series for mastering the DP-500 exam, I’ve also written articles on how you can quickly turn data into insight, by creating visualizations directly within the Synapse Studio – a web-based interface used for working with Synapse pools!

So, the main idea of this article is NOT to provide you with the technical aspects of Dedicated and Serverless SQL – instead, the focus will be on understanding in which business scenarios to use each of them.

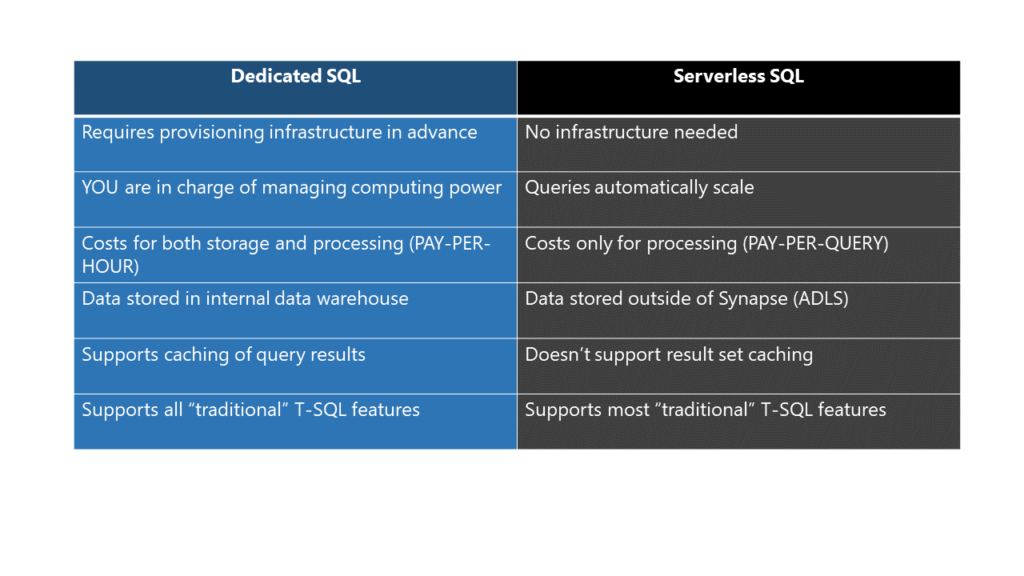

However, before we dive into use cases for Synapse SQL pools, let’s try to understand the key differences between these two options:

Obviously, this is a simplified comparison and you should definitely check the official docs for a more detailed overview of the features that are currently (not) supported in each of the pools. You should also check the blog and YouTube channel of my friend Andy Cutler, who shares fantastic resources about Azure Synapse Analytics.

Ok, let’s now focus on various real-life scenarios and consider which Synapse pool to choose for each of them…

#1 Quick data exploration of the data in ADLS

Answer: Serverless SQL pool – If you want to get a quick insight into the data stored within the ADLS, Serverless SQL enables you to do just that – even more, by writing a familiar T-SQL code! This way, you can avoid time-consuming ETL processes and reduce time-to-analysis

#2 Quick data exploration of the data stored in Dataverse or Cosmos DB

Answer: Serverless SQL pool – If you need to analyze the data stored in either Microsoft Dataverse or Azure Cosmos DB (analytical storage in Cosmos DB), you can query this data directly using Azure Synapse Link. On the flip side, to be able to query this data using a Dedicated SQL pool, you have to load it in the first place

#3 Querying data stored in Delta Lake format

Answer: Serverless SQL pool – Delta Lake format is still not supported in Dedicated pool, while you can read these files using Serverless

#4 Creating persistent database objects

Answer: Dedicated SQL pool – Since a Serverless pool comes with no dedicated storage, you can’t create persistent database objects, such as tables or materialized views. Serverless supports creating only metadata objects (for example, views or external tables, where the data resides outside of Synapse). Additionally, creating temporary tables, albeit possible, comes with many limitations compared to “traditional” temporary tables that you maybe know from SQL Server or similar RDBMSs

#5 Many concurrent users querying the data

Answer: Dedicated SQL pool – As we’ve already learned, in a Serverless SQL pool, you’re paying for the amount of data processed. So, if your workload assumes that there are many concurrent users querying the data, you should be better off storing that data in the Dedicated pool and paying only predictable costs

#6 DirectQuery requirements in Power BI

Answer: Dedicated SQL pool – Let’s say that one of the business requirements when planning your Power BI architecture is using DirectQuery storage mode. You can read more about the DirectQuery storage mode in this article, and in which scenarios it makes sense to go this way. Since Power BI will generate a separate query for every single visual on the report page, it can easily happen that there are dozens of queries targeting the underlying data source. If you recall that Serverless SQL is a typical PAY-PER-QUERY model (meaning, more queries, more money out of the pocket), it’s quite obvious why you should stick with a Dedicated pool in this scenario

#7 Performance

Answer: It depends😉 – This one is the most complex consideration, so obviously there is no single correct answer. Depending on the various factors, the same query may perform better on the Dedicated or Serverless pool respectively. So, let’s try to demystify some of the most common performance considerations (again, please keep in mind that this is just a general and simplified overview, and reliable conclusions can be made only after thorough evaluation and testing) for each of the pools:

Dedicated SQL pool performance enhancements

- Use materialized views – encapsulate complex query logic in the form of the persistent database object, so it can be reused multiple times later and data can be retrieved faster

- Use a proper table distribution option – there are 3 table distribution options in the Dedicated pool

- Round-Robin – default type. Works best for the staging tables when you need fast data loading

- Hash – uses a specific column for data distribution and then applies a hash algorithm to deterministically assign each row to one distribution. Works best for large fact tables to speed up joins and aggregations

- Replicate – keeps the replica of the whole table on each compute node. This means, no need to transfer the data between the nodes during the query processing. Works best for smaller tables, such as dimension tables

- Keep statistics up to date – in a nutshell, statistics help the engine to come up with the optimal execution plan for your queries. You should check if the AUTO_CREATE_STATISTICS option is turned on (as per default settings)

- Carefully plan partition strategy – if your partitions contain less than 1 million rows, it might have a negative impact on the clustered columnstore index efficiency. A dedicated SQL pool will automatically partition your data into 60 databases (each of 60 nodes in the MPP architecture). Therefore, if you create 100 partitions on the table, you will have 6000 partitions in total distributed on the nodes!

Serverless SQL pool performance enhancements

- Use Parquet format whenever possible – Parquet is a columnar format, that stores the data in a compressed way, where each column is physically separated from other columns. This brings many performance benefits – most notable – the engine will be able to skip unnecessary data from scanning (only columns that are part of the query will be scanned), while also the memory footprint of the parquet file will be a few times smaller than CSV. Writing exactly the same query to retrieve exactly the same data from CSV vs parquet file, may produce a significant difference in the amount of data scanned and, consequentially, in the amount of money you’re going to be charged

- Keep CSV file size between 100 MB and 10 GB – in case you’re dealing with larger files, split them into multiple smaller files and use FILEPATH and FILENAME functions to target only necessary data. In addition, manually create statistics for CSV files, especially for columns used in joins, WHERE, ORDER BY, and GROUP BY clauses

- Consider adjusting inferred data types – the built-in schema inference feature enables you to quickly get up and running with writing queries, but this feature comes with a price – and the price is potentially inadequate column data types. For example, the parquet file doesn’t contain metadata about the maximum character column length, so Serverless SQL will automatically set these columns as varchar(8000), even though you have a column containing, let’s say, zip codes, where varchar(20) will be completely sufficient

- Leverage CETAS to optimize frequently run queries – this is one of the key features of Serverless SQL. Let’s say that you have a frequently run query with many joins – you can materialize the result of this query to a new set of files (CETAS creates a set of parquet files in your ADLS), so you can then use this single external table as a reference in all subsequent queries

Conclusion

Of course, these are all just recommended practices. In other words, it doesn’t necessarily mean that you should blindly follow any of these recommendations without taking into account additional factors.

A decision on which Synapse pool to use in a certain real-life scenario is usually dependent on the combination of the multiple consideration points we elaborated on in this article. In the end, it’s up to you as an enterprise data analyst or architect to evaluate the significance of each of these items and, depending on the overall conclusion (performance vs cost-efficiency) determine the best value-for-money implementation strategy.

Thanks for reading!

Last Updated on January 25, 2023 by Nikola

Tom

Great article!

Miguel

This was a super useful post! Thanks a lot Nikola!