In the previous few weeks, I was working with a client to migrate the existing Power BI semantic models, which were using Import mode, to Direct Lake mode in Microsoft Fabric.

If you’re wondering what is Direct Lake mode and how it works behind the scenes, I suggest you start by reading this article.

Currently, Direct Lake mode comes with a certain set of limitations, so depending on which of the features your current import mode semantic models leverage, the migration process can be more or less “painful”. The purpose of this article is to help you understand potential caveats and overcome various obstacles on your Direct Lake migration journey.

This article also serves as the homage to the wonderful work of Michael Kovalsky (LI). In case you missed it, Michael already did a tremendous job for all Power BI (and Tabular model in general) practitioners, by creating the Best Practice Analyzer and Report Analyzer tools. Now, Michael also created a set of scripts to help streamline the Direct Lake migration process, by leveraging a Semantic Link feature in Microsoft Fabric. You can find all the scripts at Michael's Github account: https://github.com/m-kovalsky/Fabric

In these previous weeks, while working on the migration task, I was constantly “bothering” Michael with questions, requests, and issues I was facing during the process. Sometimes, this was happening in “strange” hours, or during the weekends, but Michael always responded quickly, and, more importantly, provided fixes and improvements immediately. Huge THANKS for this!

Setting the stage

Here is my scenario: there is an existing Power BI semantic model in import mode (in reality, there are many of them, but for the sake of keeping things simple for this blog post, let’s pretend that there is only one semantic model to be migrated).

This is how my report looks like (don’t pay attention to the visual side – here, functionality is our focus):

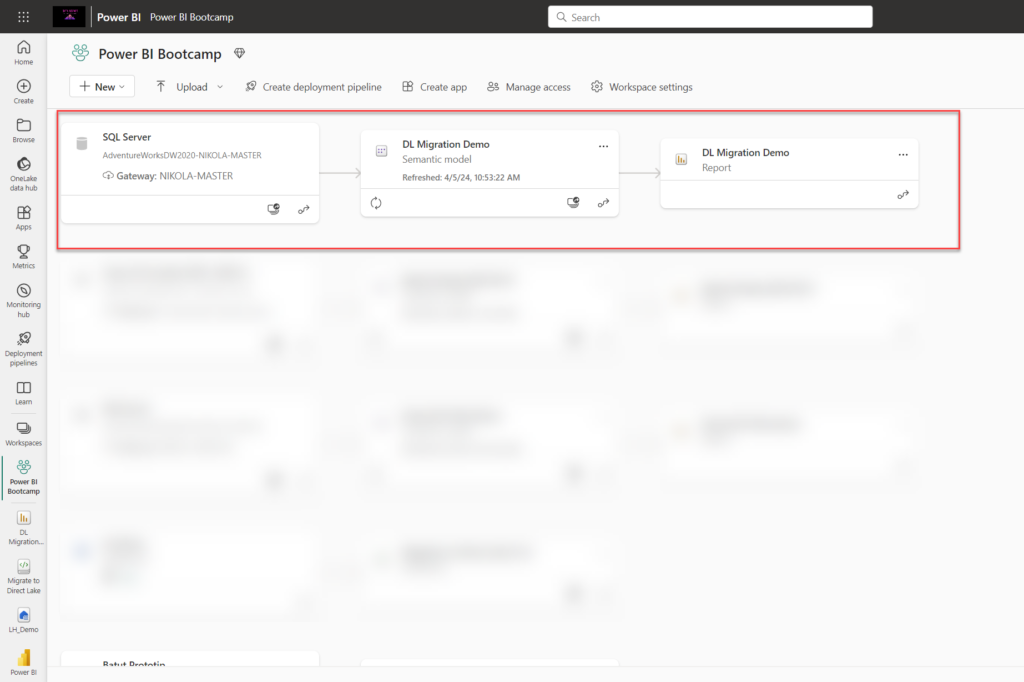

If I open the lineage view, I can confirm that the report connects to the semantic model which is based on the data coming from my Adventure Works SQL Server database:

Scenario

Our plan is to have this same report running on top of the Direct Lake semantic model, but WITHOUT the need to rebuild the report from scratch! So, we need to ensure that everything is in place and that we can simply rebind the existing report to the Direct Lake model and that it – just works!

Question #1: Do you have the data already in OneLake?

The first thing you must consider is: do you already have the semantic model data available in OneLake (in the form of delta tables) or not? In case you don’t have it, Michael’s script has you covered and you can export the semantic model as a Power Query template file (.pqt) and then load it with Dataflows Gen2 into your Fabric Lakehouse/Warehouse. This step will create delta tables for each of the tables from the semantic model. The entire process is described here.

The other scenario is that you already have this data available in OneLake (maybe as a part of another ETL process). And, this was exactly my case with the client. But, things are usually not straightforward in real life:)…So, tables in OneLake have different names than the ones which are in the semantic model. Think of the following naming convention: schema name from the source database_table name (i.e. aw_dimcustomer, aw_dimproduct, etc.). Therefore, I didn’t want to leverage .pqt and create redundant data in my lakehouse, since the data was already there.

Remark: Michael’s package of scripts contains a dozen useful functions, but you might not necessarily need all of them. I suggest you check and list all the available functions and decide which of them to run in your own scenario

Let’s start our migration journey!

Question #2: Is your semantic model in the same workspace as Lakehouse?

This was one of the obstacles at the beginning because the scripts were assuming that the Direct Lake semantic model AND the lakehouse reside in the same workspace. However, in real life, more often than not, you might want to keep lakehouse(s) in a separate workspace to semantic model(s) and/or reports. Therefore, Michael added the optional workspaceName parameter for each function. If you don’t specify the value for workspaceName, it will default to the workspace of the lakehouse which is attached to a notebook.

Step #1 – Create a blank Direct Lake semantic model

Once you install the latest .whl package from here, the first step is to create a blank Direct Lake semantic model.

import fabric_cat_tools as fct newDatasetName = 'DL Migration Demo DL' #Enter the new Direct Lake semantic model name fct.create_blank_semantic_model(newDatasetName, workspaceName='Power BI Bootcamp')

Please, pay attention that I specified the workspace in which I want my new Direct Lake model to reside.

At this moment, this is just a blank semantic model, that doesn’t contain any objects, such as tables, relationships, measures, etc.

Step #2 (Optional) – Migrate calculated tables to a lakehouse

In case you have any calculated tables in your Import semantic model (for example, DAX Calendar table), you need to migrate these tables to OneLake first, because Direct Lake models currently don’t support calculated tables and calculated columns. Therefore, these tables should first be “materialized” as delta tables in the lakehouse, before leveraging them in the Direct Lake model.

Since I don’t have any calculated tables in my model (except the Field parameter table, but more on that later in the specific migration step), I skipped this step in my migration process.

Step #3 – Migrate tables and columns from the existing import model to the Direct Lake model

In this step, there was another challenge with workspaces, so Michael extended his script to include additional optional parameters to specify: lakehouseName and lakehouseWorkspaceName.

fct.migrate_tables_columns_to_semantic_model('DL Migration Demo', 'DL Migration Demo DL', workspaceName= 'Power BI Bootcamp', lakehouseName= 'LH_Demo', lakehouseWorkspaceName='Data')

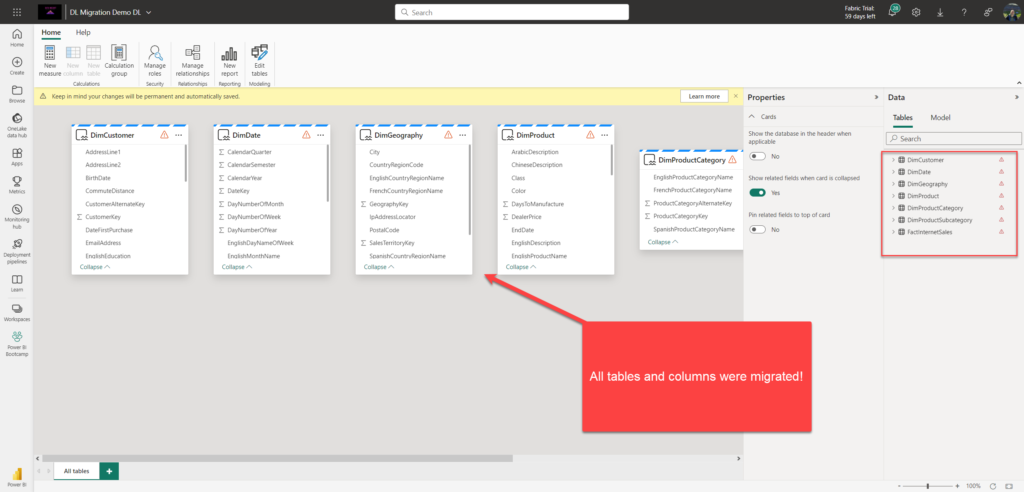

After running this cell, let’s check our Direct Lake model.

Amazing! All tables and columns were successfully created in my Direct Lake model.

Step #4 (Optional) – Migrate calculated tables to a semantic model

In case you have calculated tables in the original semantic model, and you created them as delta tables in lakehouse in Step #2, now it’s time to migrate them from the lakehouse to the Direct Lake semantic model. Since I don’t have calculated tables, I’ve skipped this step.

Step #5 – Migrate model objects

This is one of the crucial steps in the migration process. Usually, your semantic model is not a bunch of disconnected tables, but a well-designed Star schema (hopefully:)) Therefore, relationships are the core component of each real-life model. Next, measures…Let’s say that your current Import model contains hundreds of measures. Re-creating them in the Direct Lake model would require a lot of effort, unless there is a way to automate their recreation. Also, other model objects, such as Calculation groups, RLS roles, perspectives, etc. are also migrated in this step.

fct.migrate_model_objects_to_semantic_model('DL Migration Demo', 'DL Migration Demo DL', workspaceName= 'Power BI Bootcamp')

Fantastic! Almost there!

Step #6 – Refresh the Direct Lake semantic model

If you’ve read my previous article about “50 Shades of Direct Lake”, you may recall that there is no “real” data refresh happening with Direct Lake – it’s metadata refresh only. Still, we need to ensure that the latest metadata is included in the model:

fct.refresh_semantic_model('DL Migration Demo DL', workspaceName='Power BI Bootcamp'

Step #7 – Add Field Parameter

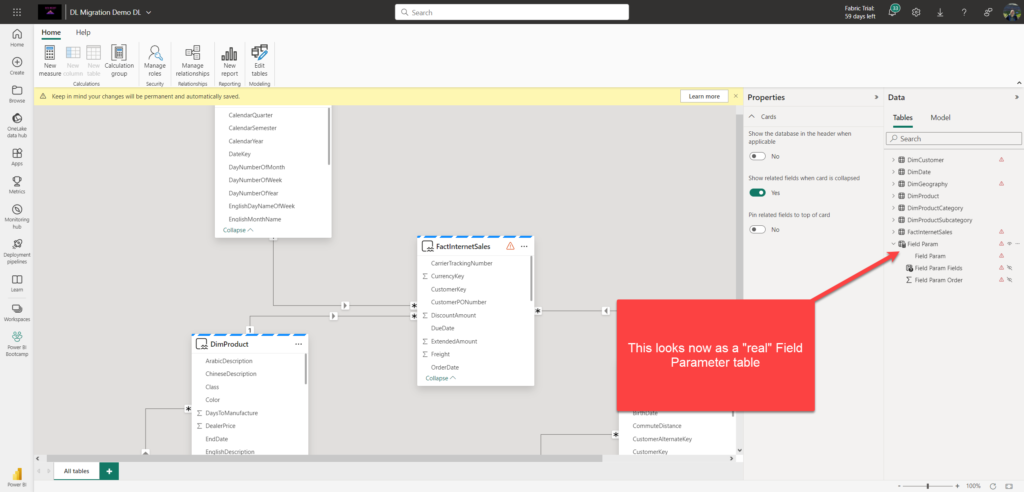

So far, things have been quite straightforward. However, the model I had to migrate for a client contains 8 field parameters, which are essentially a special type of the calculated table. At this moment, you definitely discovered that DAX calculated tables are unsupported in Direct Lake, so the original script version for Field Parameters was basically creating a regular table in the Direct Lake model and marked it as a calculated table (you can check this in Tabular Editor)

fct.add_field_parameter ('DL Migration Demo DL'

, tableName='Field Param'

, objects=["'DimProduct'[Class]","'DimProduct'[Color]","'DimGeography'[EnglishCountryRegionName]","'DimCustomer'[MaritalStatus]","'DimProductCategory'[EnglishProductCategoryName]"]

,workspaceName='Power BI Bootcamp' )

This function is slightly different than previous ones, because it requires an array of objects – these are, essentially, columns and/or measures that we want to use as field parameters.

Remark: The function I used above can be used for adding field parameters to a semantic model, but the package also provides a migrate_field_parameters function, which can migrate all field parameter tables in one run

Let me now open Tabular Editor and check how this table looks under the hood:

This looks ok, BUT…This is NOT a Field parameter and if you try to use fields from this table the same as with “regular” field parameters, you won’t be able, for example, to place the Field parameter as a Value in the Matrix visual. I told Michael about this issue and we realized that the missing piece was a special extended property on the hidden Fields column: it’s a JSON extended property called ParameterMetadata. When it’s set to: {“version”:3, “kind”:2}, this “tells” Power BI that it’s a field parameter table. Michael adjusted the add_field_parameter function in the script, so that the latest version takes care of this additional property.

Step #8 – Rebind the existing report

So, all these steps performed were just a kind of “warm-up” and a prerequisite for the ultimate goal we are trying to achieve – rebinding the existing report (the one on top of the Import semantic model) to a new Direct Lake model, so that it just works, without the need to recreate the report from scratch.

import fabric_cat_tools as fct reportName = 'DL Migration Demo' # Enter report name which you want to rebind to the new Direct Lake model fct.report_rebind(reportName, 'DL Migration Demo DL', workspaceName='Power BI Bootcamp')

PRO TIP: You can also rebind ALL the reports which connect to Import mode semantic model, by using the report_rebind_all function.

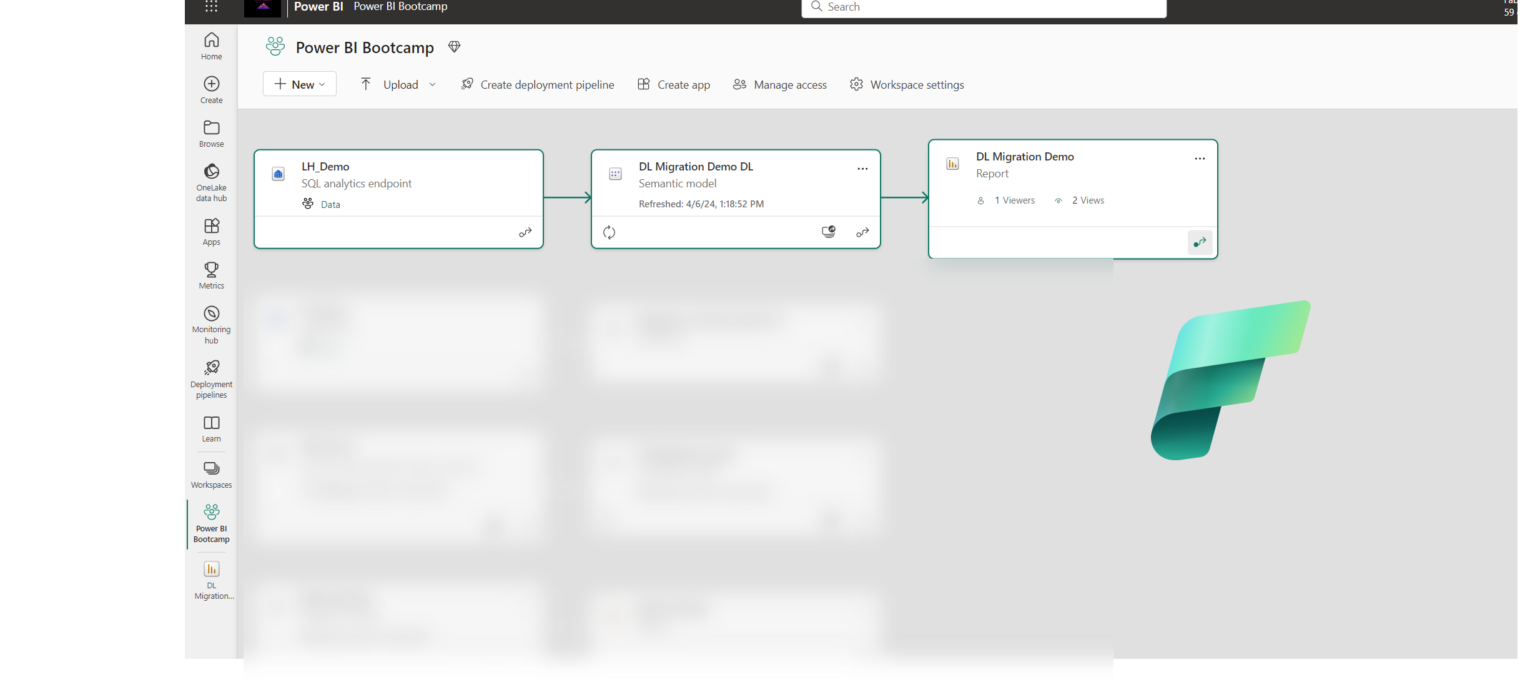

First, I’ll check if the function above really changed the report binding:

As you notice, the report now connects to the Direct Lake model, and the data source is the SQL Analytics Endpoint of my lakehouse.

Finally, let’s run the report and keep our fingers crossed that everything “just works”…

Ooops, this doesn’t look good:(…The error message states that there is no FactInternetSales table in our Direct Lake semantic model.

Step #9 – Mapping tables between semantic models

The error message you see above is quite clear. And, if you recall, our FactInternetSales table from SQL Server Adventure Works database, is actually aw_factinternetsales table in the lakehouse. Therefore, we need to rename entities in our Direct Lake semantic model, so that they point to the appropriate object in the lakehouse.

Let me quickly explain why this issue is happening in the first place. In Import mode, partitions are essentially M code statements. Since Direct Lake doesn’t support M code and partitions defined by M code, the script simply creates a Direct Lake partition (there can be only one single partition per table) with the same name. If you have a delta table with the same name in the lakehouse, then great! Everything will work! However, if there is no delta table with that name, the report will crash like in my scenario.

Now, you have two options here: you can either modify partition names on your own in Tabular Editor, or you can execute another Michael’s function and apply multiple changes at once. I’ll go with the latter option here…

fct.update_direct_lake_partition_entity('DL Migration Demo DL'

, tableName = ['FactInternetSales','DimCustomer','DimDate','DimGeography','DimProduct','DimProductCategory','DimProductSubcategory']

, entityName = ['aw_factinternetsales','aw_dimcustomer', 'aw_dimdate','aw_dimgeography','aw_dimproduct','aw_dimproductcategory','aw_dimproductsubcategory']

, workspaceName = 'Power BI Bootcamp'

, lakehouseName = 'LH_Demo'

, lakehouseWorkspaceName = 'Data')

Let’s apply these changes back to our semantic model and refresh its metadata. Now, I’ll check my report again…

TA-DA! Works like a charm!

Conclusion

We made it! Thanks to brilliant work from Michael Kovalsky, and the “magic” of the Semantic Link feature in Fabric, I was able to rebind existing Power BI reports that were using Import mode semantic models to Direct Lake semantic models – and all of that without recreating semantic models and reports from scratch!

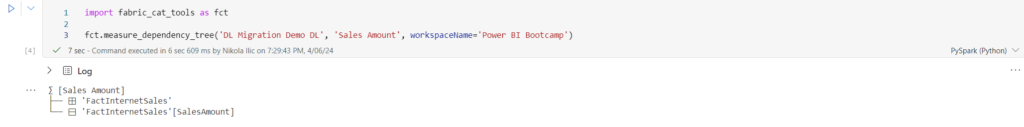

Go and check the entire package Michael created – it’s full of gems, and it’s not related to the Direct Lake migration scenario only. One of my favorites is measure_dependency_tree, which comes super handy when you have a complex model with a bunch of dependent measures:

Thanks for reading!

Last Updated on April 7, 2024 by Nikola

Aleksandar

Hi Nikola, great article again!

I’m more interested in what use cases are appropriate to do this migration observing all limitations of Direct Lake vs Import Mode?

Nikola

Thank you Aleksandar. Huh, decision if and when to migrate from import to Direct Lake depends on many different factors and should be evaluated in the wider scope. But, if you are happy with your import models, I see no reason for migrating them (yet).

Jonathan

Hi Aleks, just a quick question, I see Synonyms and Q&A isn’t really available in the Direct Lake Semantic Model. Is this by design or am I missing something?

KD

Thanks for this article. Very helpful. I am seeing 404 error for the migration of the data model to lake house. Is there any other link?

Sunay

Hi Nikola, Thank you for great article.

A question, is your connection to lakehouse is direct connection or SQL Endpoint connection?

Nikola

Hi Sunay,

It’s through the SQL Endpoint.

Cheers,

Nikola

Vivek

Hi Nikola,

Why did you choose to connect using the SQL endpoint ,wont live connection be a better option ?

Nikola

Hi Vivek,

Not sure which part of the article you are referring to, so maybe you can clarify a little bit more. Direct Lake model must connect to Lakehouse through the SQL analytics endpoint, because of the eventual fallback to DirectQuery behavior.

celestina Cann

Hi Nikola,

where did you get this? ‘fabric_cat_tools as fct’

I could not find the package you installed. The page returned error.

Thank you

Nikola

Ah, that’s the old version of the library. You should use Semantic Link Labs now: https://semantic-link-labs.readthedocs.io/en/stable/

Patel, Khyati

Hello,

I had followed your steps. However, as my model is big it generated 3 pkt files. So how can i handle that?

Edgar Silva

That is great but it does not work when the lake has a schema structure which is the case of most companies.

Is there any solution for that?