Few weeks ago, I was working on a business request to prepare data integration scenario for customer surveys. After initial conceptual phase and logical data modeling, during physical data modeling phase, I was thinking about certain data types which will satisfy both business needs and ensure best usage of SQL Server resources.

My suggestion was to go with Datetime2 datatype for all datetime columns, while my boss insisted on sticking with good old Datetime. He is an old-school guy, I thought, accustomed to use traditional data types, so I will prove him wrong and convince to switch to newer (and Microsoft recommended, by the way) data type.

Introduction

Before proceeding further, I need to briefly emphasize on main characteristics and differences between Datetime and Datetime2 data types.

Following table gives quick overview about those two:

| Type | Format | Accuracy | Storage | Range | Precision defined by user | ANSI SQL Compliant |

| Datetime | YYYY-MM-DD hh:mm:ss.nnn | 0.00333 sec | 8 bytes | 1753-01-01 to 9999-12-31 | No | No |

| Datetime2 | YYYY-MM-DD hh:mm:ss.nnnnnnn | 100 nanosec | 6 – 8 bytes | 0001-01-01 to 9999-12-31 | Yes | Yes |

Datetime

Datetime is “traditional” data type for storing data about date and time. It takes strictly 8 bytes of memory, 4 bytes for date part and 4 bytes for time part.

So, let’s check what is going on behind the scenes here:

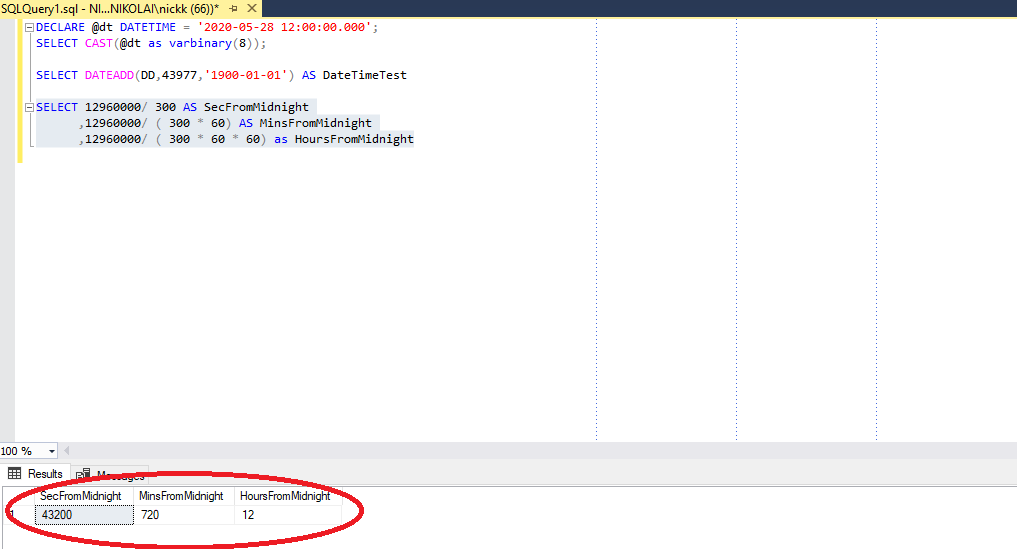

DECLARE @dt DATETIME = '2020-05-28 12:00:00.000'; SELECT CAST(@dt as varbinary(8));

We got following hexadecimal value: 0x0000ABC900C5C100

So, if we take first 8 characters (0000ABC9) and convert that to decimal system, we are getting 43977 integer value. This value shows number of days since 1900-01-01, which can be tested as follows:

SELECT DATEADD(DD, 43977, '1900-01-01') AS DateTimeTest

And we see that our previously “defined” date (2020-05-28) is there.

Lets check time part, by taking out last 8 characters (00C5C100). Converting this to decimal, gave me 12960000. This number represents number of clock ticks from midnight. And, since the accuracy is 1/300 seconds, we can do the following calculations in order to get more human readable results:

SELECT 12960000/ 300 AS SecFromMidnight ,12960000/ ( 300 * 60) AS MinsFromMidnight ,12960000/ ( 300 * 60 * 60) as HoursFromMidnight

Once we “add” this time part to previous date part, we get 2020-05-28 12:00:00.000, right as in the first declaration.

Datetime2

Datetime2 was introduced with SQL Server 2008, so it is here long enough to draw some comparisons with its “older brother”. Main difference is way of data storage: while in Datetime type, date comes first and then time, in Datetime2, 3 bytes in the end represents date part!

Let’s check with same data as previously:

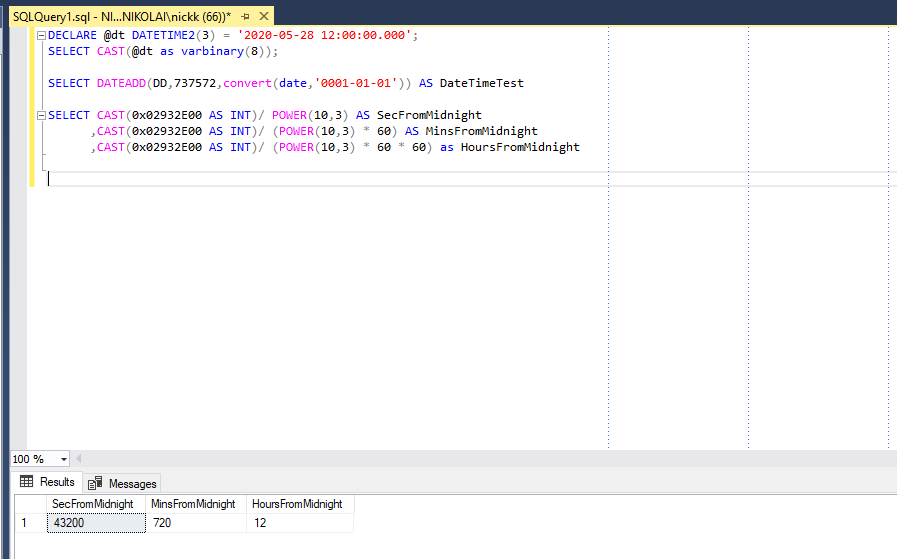

DECLARE @dt DATETIME2(3) = '2020-05-28 12:00:00.000'; SELECT CAST(@dt as varbinary(8));

Now, hexadecimal value is: 0x03002E930224410B

So, we are taking last 6 characters for date (3 bytes), but the rightmost byte is the most important within Datatime2, so it goes first: 0B4124. Converting this to decimal and we get: 737572. When we add this to a ‘0001-01-01’ starting point of Datetime2 data type, we are getting our date (2020-05-28) back:

SELECT DATEADD(DD,737572,convert(date,'0001-01-01')) AS DateTimeTest

For time part, things become more complicated, because it depends on defined precision. In our case, it’s 3 digit precision, but it can go up to 7 digits (which is the default, in case that we haven’t defined any specific value). Depending on precision, Datetime2 takes between 6 and 8 bytes of storage.

Back to our example:

SELECT CAST(0x02932E00 AS INT)/ POWER(10,3) AS SecFromMidnight ,CAST(0x02932E00 AS INT)/ (POWER(10,3) * 60) AS MinsFromMidnight ,CAST(0x02932E00 AS INT)/ (POWER(10,3) * 60 * 60) as HoursFromMidnight

Again, we got results as expected.

Back to our original scenario…

Ok, this was a brief overview of both datatypes. When creating tables, in many cases one can’t foresee amount of data which will be stored in specific table. Sometimes, you create a table believing that it won’t go above certain threshold, but after a while you realize that your assumptions were not correct.

However, we were definitely sure that this table will be BIG! When I say big, I’m talking about probably close to 100 million rows after year or two. And, back to our original request to create optimal tables, I was thinking that storing data as Datetime2 with 2 digits precision (which takes 6 bytes), multiplying with at least 5 datetime columns, can save us a lot of space on the distant run.

Less storage needed, bigger precision, larger date range, SQL standard compliant…

Why on Earth would someone still use Datetime ??!!

But, then I started to dig deeper. And tried to search web to find experts’ opinion on this topic. I thought, there has to be something with Datetime2 (I mean, my boss usually knows what he talks about, hehe). So, I’ve found this discussion with quite well documented shortcomings of Datetime2.

Main issue is the lack of (simple) possibility to do basic math operations with dates, such as calculating the difference between two dates in days, hours, minutes, etc.

Here is one basic example with Datetime type:

SELECT CONVERT(DATE, GETDATE()+1) AS Tomorrow;

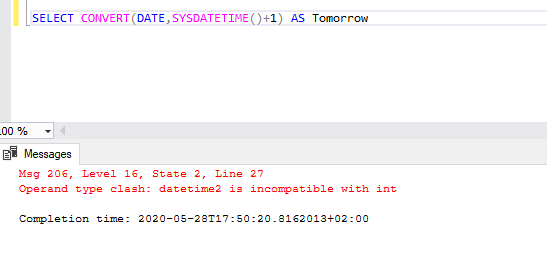

If you try to do the same thing with Datetime2, you will get an error:

SELECT CONVERT(DATE,SYSDATETIME()+1) AS Tomorrow;

Another really significant drawback is if you try to calculate 1st day of current month, which is quite common scenario:

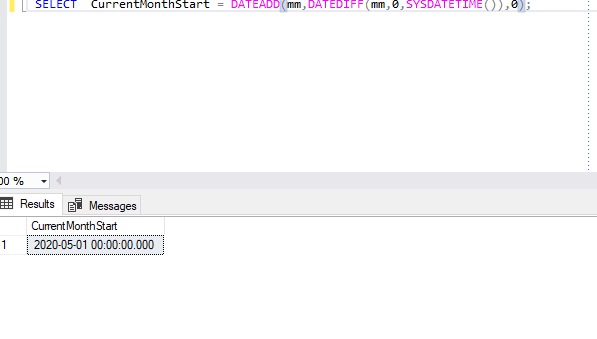

SELECT CurrentMonthStart = DATEADD(mm,DATEDIFF(mm,0,SYSDATETIME()),0);

Result is as expected:

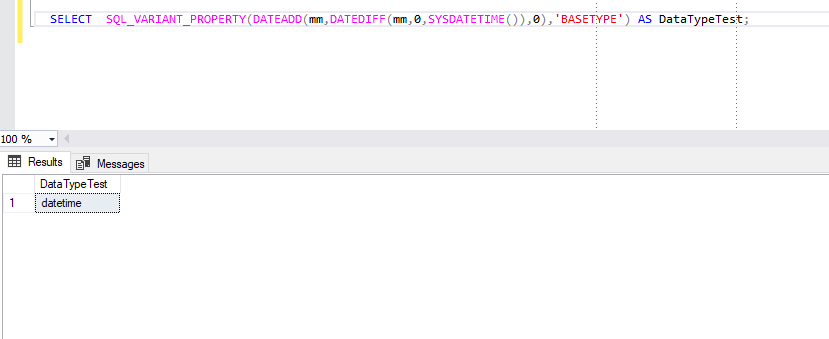

But, you wouldn’t expect that data type of this is: DATETIME, not DATETIME2!

SELECT SQL_VARIANT_PROPERTY(DATEADD(mm,DATEDIFF(mm,0,SYSDATETIME()),0),'BASETYPE') AS DataTypeTest

Which means that every time you are doing comparisons using these calculations, you will finish with implicit data conversion!

Another really important consideration comes from the fact that SQL Server can’t use statistics properly for Datetime2 columns, due to a way data is stored (remember reverse sequence of bytes previously explained). More on those issues in this great article written by Aaron Bertrand.

Wrong estimations lead to non-optimal query plans, which decrease the performance and this is one more significant drawback.

So, wrong estimations and bad query plans as a consequence, implicit data conversion in common use scenarios, impossibility to use basic math operations for date calculations…

Why on Earth would someone want to use Datetime2 ??!!

Last Updated on June 7, 2020 by Nikola

Dennes

Very Good, but also scary!

This is the kind of thing which can change on any minute, in a service pack or something like that, and many would not notice.

Nikola

Thanks for your comment Dennes. I agree with you, we should be careful and try to stay tuned for all adjustments.

Guy Glantser

Thanks Nikola!

I disagree with you. I think that DATETIME2 still has some important benefits, just like you mentioned – less storage, bigger precision, larger date range and being SQL standard compliant. I think that the only meaningful drawback of DATETIME2 is the bug in the cardinality estimator when associated with the DATEADD function. This is a big deal, but it can be avoided by using a DATETIME value in the relevant expression inside the DATEADD function, and then converting back to DATETIME2, just like Paul White suggested.

Nikola

Hi Guy,

Thanks for your comment.

I agree with your disagreement:)…I was also wholeheartedly on Datetime2 in the beginning, exactly because of all advantages you mentioned, but in the end I’m not sure which type is “better”. Hmmm, for larger date range and being SQL standard compliant, I don’t consider these as so significant advantages, since I really don’t see many scenarios when that can make big impact (of course, maybe I’m just assuming wrong). But, for bigger precision and memory consumption – fully agree with you, those are really important advantages, which one should seriously consider when making design decisions.

I guess, as in 99.9% of situations when it comes to SQL Server, the correct answer is: “It depends:)”

Thanks again for your opinion, really appreciate that.

Eitan Blumin

I think the bad statistics estimations should pretty much be a deal-breaker here…